It’s no secret, and it certainly doesn’t happen by accident: in 2017, ranking in major search engines such as Google has become a science, the science of knowing your competitors, of knowing your customers/visitors and of course of knowing which SEO practices to ditch and which to keep and improve.

What used to be a handful of on-page, off-page, and architecture factors has evolved into hundreds of signaling attributes fed through a complex algorithm that is constantly being refined and learning from your behavior.

With search engines becoming smarter and more sophisticated with each passing day, it has become crucial for businesses seeking the benefits of organic search engine optimization to comprehend the undertaking required to rank highly in competitive search verticals.

This post aims to step you through dissecting your organic search competitors in SERPs so you can better understand the time, money, and effort you will need to put forth to achieve your target rankings.

This post is also a piece of the larger puzzle from SEO strategy guidelines for online businesses.

Table of Contents

- 1 The Lay of the Land – Understanding Your Competitive Landscape

- 2 Getting Started with the SEO Competitive Analysis

- 3 Anatomy of a Keyword

- 4 Looking at Competitors’ Authority and Trust

- 5 DA to rule them all

- 6 Data Mining – Release the Scrapers

- 7 Competitor Analysis and Opportunity Identification

- 8 Usurp The SERP – Planning the Takeover

- 9 Wrapping Up

The Lay of the Land – Understanding Your Competitive Landscape

In the same way a landscape architect needs to understand the elements of the property he is designing for in order to achieve the goals of the project, you need to understand how your website stacks up to your competitors, what gold (or iron) may be underneath the surface, and how your surrounding environment can impact the outcome of your campaign.

First, you need to know what you are getting yourself into. Before you go throwing your client's money at writing content and building link relationships targeting a specific set of keywords, you need to assess the foundations and maturity of your competitive market.

Think of yourself as an asset portfolio manager, because that’s what you are. In the same way fund managers need to analyze company financial data and operational performance before making investment decisions, you need to analyze your SEO competitors to inform your investment decisions.

In 2017, there are still a lot of companies that dive right into a new market and immediately start spending money on pay-per-click advertising, landing page design, and social media marketing without really identifying if and where the opportunity lies.

This whole approach of ready, fire, aim may work for some but it is the exception and not the rule.

Instead you should take steps to calculate your risk:

- Analyze your competitors within your target search vertical

- Identify weaknesses in either under-served segments or for under-optimized keywords

- Know your costs

- Project your returns

- Create a realistic timeline for both implementation and break-even
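The last three steps above come down to simple arithmetic. Here is a minimal sketch of a break-even estimate, assuming a one-off upfront cost plus flat monthly costs and returns. The function name and its parameters are my own illustration, not part of any tool:

```python
import math


def months_to_break_even(upfront_cost, monthly_cost, monthly_return):
    """Return the whole months needed for net monthly returns to repay the upfront cost.

    Returns None when monthly returns never exceed monthly costs,
    meaning the campaign would not break even under these assumptions.
    """
    net_per_month = monthly_return - monthly_cost
    if net_per_month <= 0:
        return None
    return math.ceil(upfront_cost / net_per_month)
```

Real campaigns rarely produce flat returns from month one, so treat the result as a rough lower bound rather than a forecast.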

Getting Started with the SEO Competitive Analysis

Once you have completed your initial keyword research, review your keywords to identify which are most relevant for your business and which have the highest volume:

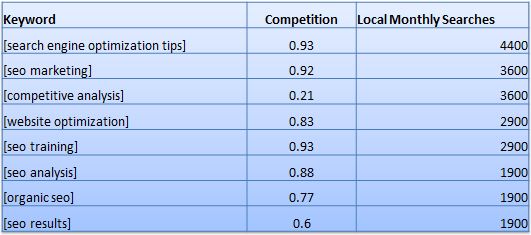

1. Start by identifying your core set of target keywords, no fewer than 5 and no more than 10.

For example, if I were to pick the core keywords that best represent this post and have the highest monthly search volume, they would be:

2. Next, use the Keyword Planning Tool to find ideas that Google identifies as semantically related. Back when Google’s Contextual Targeting was in beta, I ran the above keyword list through it and drilled down to the second tier, see below:

Now, similar groupings can be obtained from Google Keyword Planner, though since August 2016 low-spend AdWords accounts no longer have access to exact search volumes.

3. Group your target keywords into 3 different buckets based on volume. This usually separates out head, body and long tail terms.

This gives you a sense of what kind of monthly search volume you can hope for if you are able to rank within the top 1-5 spots, with the #1 spot capturing on average 36% of clicks.

4. Optimize from tail to head.

For anyone just getting started with SEO this may be a new concept, but you may have also seen or read about ‘the long tail of search.’

Essentially what you are doing here is focusing on long-tail terms which are highly relevant to your business and also offer opportunities to build content for the larger, more competitive head terms.
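Steps 3 and 4 can be sketched in a few lines of Python. This is only an illustration: it assumes you already have a dictionary of keywords and their monthly search volumes, and it cuts the ranked list into three roughly equal groups, which is one simple way to bucket (you may prefer fixed volume thresholds). The 36% figure is the average #1 click share quoted above. All function names are hypothetical.

```python
TOP_SPOT_CTR = 0.36  # average share of clicks for the #1 spot, as quoted in this post


def bucket_by_volume(volumes):
    """Split {keyword: monthly_volume} into head, body and tail buckets.

    Keywords are ranked by volume and cut into three roughly equal groups.
    """
    ranked = sorted(volumes.items(), key=lambda item: item[1], reverse=True)
    size = -(-len(ranked) // 3)  # ceiling division so no keyword is dropped
    names = ("head", "body", "tail")
    return {
        name: dict(ranked[i * size:(i + 1) * size])
        for i, name in enumerate(names)
    }


def estimated_top_spot_clicks(monthly_volume, ctr=TOP_SPOT_CTR):
    """Estimate monthly clicks if the keyword ranks #1."""
    return monthly_volume * ctr


def optimization_order(buckets):
    """Return keywords in tail-to-head order: tail first, then body, then head."""
    return [kw for name in ("tail", "body", "head") for kw in buckets[name]]
```

Feeding the result of `bucket_by_volume` into `optimization_order` gives you a working list that starts with the least competitive terms.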

In 2017, long tails have become even more important for SEOs. Let’s take a closer look at this!

Anatomy of a Keyword

With your core keywords grouped into buckets based on exact local monthly search volume, you will most likely notice that the longer the search query, i.e. the more words in the phrase, generally the lower the search volume. Voice Search and Mobile-First Index might drastically change that, but we’re not there yet.

As a general rule of thumb, the more words in the query, the easier it is to rank for that phrase. This is certainly not an absolute but has been the case for a majority of the terms I have worked with. If you know of examples where this theory does not hold up, please share in the comments.

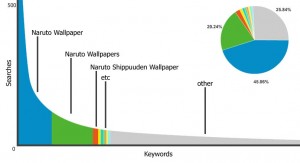

Below is a graphical representation of what is meant by the long tail of search:

Image credit: Marketing Hub

As you can see above, shorter query phrases receive the most volume, these are your head terms, followed by body (usually 3-4 words) and then the tail which usually consists of keywords that contain 5+ words.
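As a rough sketch, the word-count rule of thumb above can be written as a tiny classifier. The body and tail cut-offs (3-4 words and 5+ words) come from this post; treating anything shorter as a head term is my assumption, and the function name is hypothetical.

```python
def classify_keyword(keyword):
    """Label a query as head, body or tail based on its word count."""
    word_count = len(keyword.split())
    if word_count >= 5:
        return "tail"
    if word_count >= 3:
        return "body"
    return "head"  # assumption: 1-2 word queries are treated as head terms
```

For example, `classify_keyword("SEO competitor analysis")` returns `"body"`, which matches how I treat that keyword later in this post.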

When optimizing any website or digital asset for the long term, it is important to capture the low-hanging fruit to begin creating conversions as soon as possible.

This is best done by optimizing backwards; from tail to head versus the other way around.

What I mean by that is to focus on acquiring traffic for the relatively non-competitive terms and working your way up the tree, by optimizing for your long-tail terms and building toward your head terms over time.

What this allows you to do is create content that is targeted to rank for your long-tail terms in the short-term while building relevance and authority for your body, and ultimately head terms, since these are often included in the long-tail queries.

Because Google constantly changes its SERPs, you will discover that the number of competing pages is no longer shown for some keywords. For example, some time ago I learned that there were 349 million pages competing for the #1 spot on Google for the head term SEO Blog. But for the very long-tail term SEO blog focused on user experience philadelphia there were only 12.6 million competing pages, even though this phrase contains the head term. Now, if you search for “seo blog”, you will no longer see the total number of competitors:

If you encounter similar situations, I think it is fair to assume that those keywords are highly competitive and nowhere near low-hanging fruit.

Looking at Competitors’ Authority and Trust

The next concept that is important to understand is Domain Authority, often referred to as DA. This is a very handy metric created by SEOmoz (now Moz) that essentially assesses a domain’s potential to rank. The higher the DA and PA (Page Authority), generally the easier it is for the website to rank for a keyword.

It’s worth noting that there are SEOs who prefer using TF (Trust Flow) and CF (Citation Flow) from Majestic when checking their competitors’ authority and trust levels.

DA/PA and CF/TF are all third-party metrics meant to fill the void left by Google’s famous PR (PageRank). Obviously none of them can replicate today’s real PR values, as those values have been kept secret for quite a while now. It is difficult to recommend one tool over another, but for this analysis I went with Moz DA.

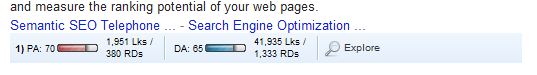

In the screenshot below I searched for ‘seo analysis’. Notice that seoworkers.com (#1 spot) is outranking a near exact match domain, seo-analysis.com (#9 spot), which also has more links (65,341 vs. 41,935). This is most likely because seoworkers.com has significantly higher domain authority: 65 compared to 27.

As you can see in the screenshot above, for the query ‘seo analysis’ the DA ranges from 27 to 87.

Please also note that in this example the site with the highest DA, SEOchat.com, is also ranking in the last position (#10). This is a perfect example of how there really is no one metric for determining SEO competitiveness.

Taking a closer look at the numbers in the screenshot, you will notice that SEOchat.com only has 12 links coming into this page from 2 domains. Also note that the only site outranking SEOchat.com with fewer page links (having only 1) is built on Google Sites, giving it a DA of 100.

DA to rule them all

There are varying opinions as to the true power of domain authority, but in my opinion, it is more or less the holy grail of SEO.

Now that you have your core set of keywords, let’s take a look at the DA of the competing websites. This is easily done in Chrome using the Mozbar extension, which will display a metric bar for each search result right underneath the meta description, as you may have noticed in the screenshot above.

Even though it is not a surefire indicator of ranking potential, I still hold that it is important to first look at your competitors’ DA when starting your SEO analysis. In my opinion this will allow you to gain at least some initial visibility into your ranking potential.

Ranking potential is an assessment of where you can realistically hope to get your rankings to; if the top 5 websites have a DA of 90+ and your website is at 20, it is not realistic to think that you will be able to grab one of the top 5 spots in no time. Again this is not always the case, but tends to generally be a safe standard of procedure.
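To make that comparison concrete, here is a small sketch that measures the gap between your DA and the average DA of the top-ranking sites. It assumes you have already collected the competitors' DA values in ranking order. The function name and the default of five results are illustrative choices.

```python
from statistics import mean


def da_gap(your_da, competitor_das, top_n=5):
    """Return how far your DA sits below the average DA of the top_n results.

    competitor_das is expected in ranking order (position #1 first).
    A large positive gap suggests a top spot is a long-term goal, not a quick win.
    """
    top_results = competitor_das[:top_n]
    return mean(top_results) - your_da
```

There is no magic threshold here. As noted above, DA is not the only factor, so use the gap as a first sanity check rather than a verdict.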

Data Mining – Release the Scrapers

In order to conduct the analysis we are going to need to go out and grab some data; what we are going to focus on for now are the basic elements that contribute to domain authority.

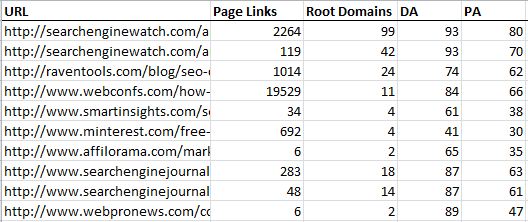

For the purposes of this analysis I am going to pick a body keyword and look at the top 10 ranking websites.

The keyword I will be using is SEO competitor analysis

Here is the page one SERP on 8/3/12:

Next we need to scrape for the data we want to analyze. Fire up your favorite scraping tool, and if you’re just getting started I recommend checking out Eppie’s slidedeck: The SEO’s Guide to Scraping Everything.

The data elements we are after are:

- URL

- Domain Authority

- Page Authority

- Number of indexed links

- Number of linking root domains

Compile these in Excel for analysis, which should look something like this:
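If you would rather build that sheet programmatically, a CSV that opens in Excel is enough. The sketch below uses Python's standard `csv` module. The column names are my own, and the single sample row uses the link counts quoted later in this post for the Web Confs URL, with DA and PA left empty because I do not state them here.

```python
import csv
import io

# Column names are illustrative; they mirror the five data elements listed above.
FIELDS = [
    "url",
    "domain_authority",
    "page_authority",
    "indexed_links",
    "linking_root_domains",
]


def rows_to_csv(rows):
    """Serialize a list of row dictionaries to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [
    {
        "url": "www.webconfs.com/how-to-analyze-your-competitors-article-39.php",
        "domain_authority": None,  # not stated in this post, left blank
        "page_authority": None,    # not stated in this post, left blank
        "indexed_links": 19529,
        "linking_root_domains": 11,
    }
]
csv_text = rows_to_csv(rows)
```

Write `csv_text` to a `.csv` file and you have one row per ranking URL, ready for sorting and filtering.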

Next we will look at the types of links pointing to these websites. You can do this with the Open Site Explorer report (Moz), Site Explorer (Ahrefs), Backlink Checker (Majestic), or the Backlink Audit Tool (SEMrush).

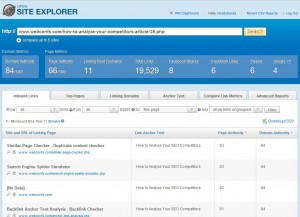

This needs to be done for each ranking URL, but for the sake of this post, I am going to pick only one. Instead of choosing the big brands, Search Engine Watch and Raven Tools, I’m going to use Web Confs; www.webconfs.com/how-to-analyze-your-competitors-article-39.php

Now we need to generate the Open Site Explorer report, which will look something like this:

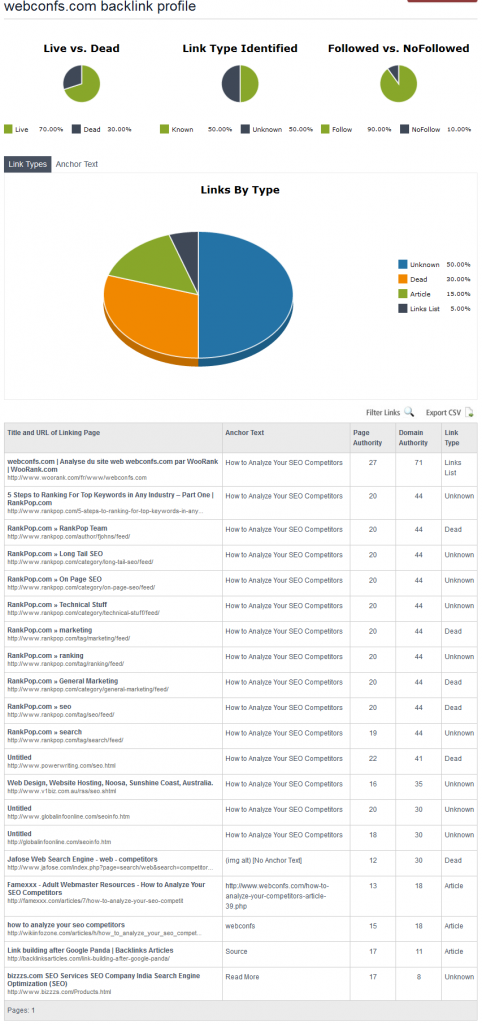

I downloaded the CSV and uploaded it into Link Detective. This returned a breakdown of all of the links by type, which looked something like this:

Link Detective is no longer available, but there is no need for it in 2017, as many of the tools listed above (Ahrefs, Majestic, etc.) already provide you with the relevant data and charts.

This type of report is helpful because it shows how your SEO competitors are building their links, and whether or not they are using spam tactics to manipulate their link profile.

From the above report we can quickly identify some actionable opportunities:

- Approximately 30% of the sample links are broken. This is an opportunity for some broken link building, and Anthony Nelson can get you started.

- 15% are from article directories and 5% are from link lists. These are not hard to trump with more authoritative links.

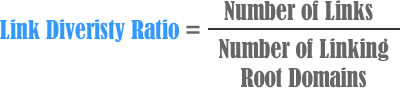

The next thing I recommend is to take a look at what I refer to as the domain’s link diversity ratio, or LDR: a very simple equation (the number of indexed links divided by the number of linking root domains) for identifying how diverse the website’s backlink profile really is.

This produces a score between 1 and n, with 1 being a perfect score representative of a website where every inbound link is from a unique domain, which is not really possible outside of some imaginary SEO vacuum.

What you are looking for are the outliers; the highs and lows. High scoring websites can be really high, sometimes to the tune of 257.14, which would be a website with 18,000 indexed links coming from only 70 websites. The lower your LDR the better.

Again, this is quick and dirty, but it can provide some quick visibility into the legitimacy of the links. Oftentimes, sites with a very high link diversity ratio are buying links, using link networks, or creating microsites to engage in link schemes.

Based on the data from OSE, the link diversity ratio for the webconfs.com competitive analysis URL is 1,775.36 (19,529 links coming from 11 linking root domains) and 156.28 at the top-level domain (1,802,896 links from 11,536 domains)
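The figures above are consistent with LDR being the number of indexed links divided by the number of linking root domains. A minimal sketch of that calculation follows. The function name is mine, and the inputs are the numbers quoted in this section.

```python
def link_diversity_ratio(indexed_links, linking_root_domains):
    """Return indexed links divided by linking root domains (lower is more diverse)."""
    if linking_root_domains <= 0:
        raise ValueError("linking_root_domains must be a positive number")
    return indexed_links / linking_root_domains


# The three examples from this post, rounded to two decimals.
print(round(link_diversity_ratio(18_000, 70), 2))         # 257.14
print(round(link_diversity_ratio(19_529, 11), 2))         # 1775.36
print(round(link_diversity_ratio(1_802_896, 11_536), 2))  # 156.28
```

Run it over every URL in your spreadsheet and sort by the result to spot the outliers quickly.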

Now I personally have nothing against webconfs.com, but their link profile for this page is relatively weak. *Hint* if you operate an established SEO Blog (minimum DA ≥ 30) this is traffic for the taking.

To take link analysis one step further, you should look at a more meaningful measure of the legitimacy of a website’s links: the number of linking C-Blocks, which shows how many of the linking websites are hosted in the same IP address range.

Nick W. over at ThreadWatch has created a nice free tool for bulk checking for duplicate Class C blocks.

This is most useful if you have identified a handful of competing domains that you believe may be interconnected and managed by the same company.
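If you want to run this check yourself, the idea is easy to sketch. The snippet below assumes you have already resolved each domain to an IPv4 address, and it treats the first three octets of that address as the C-Block. The function name is hypothetical and this is not how the tool mentioned above is implemented.

```python
from collections import defaultdict


def shared_c_blocks(domain_ips):
    """Group {domain: ipv4_address} by C-Block and keep blocks with 2+ domains.

    The C-Block is taken to be the first three octets of the IPv4 address.
    """
    blocks = defaultdict(list)
    for domain, ip in domain_ips.items():
        c_block = ".".join(ip.split(".")[:3])
        blocks[c_block].append(domain)
    return {block: domains for block, domains in blocks.items() if len(domains) > 1}
```

Any block that comes back with several of a competitor's linking domains is worth a closer look.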

Competitor Analysis and Opportunity Identification

To use an old saying, now we are getting down to the meat and potatoes.

So what do you do with all of the data you have now gathered and some of the surface opportunities you have begun to identify?

Start mapping out a plan for action.

The easiest place to start is obviously scooping up links from your competitors that are either broken, of low quality, or from the same C-Block.

As for the more competitive terms in your buckets, the ones that will really drive qualified traffic to your website, you need to take a more scientific approach, and here is what I mean.

Usurp The SERP – Planning the Takeover

The example I have provided is very small, to the extent that the nitty gritty analysis could be done manually without taking excessive amounts of time.

However, if you needed to identify tens of thousands of keywords to invest in as part of an enterprise SEO strategy, this would require a more scalable approach to evaluating individual keyword opportunities.

This can be achieved through designing an opportunity evaluation model.

Please let me preface by saying this is not a complete model, it is just an approach to analyzing competitive SEO drivers on a large-scale. However, if refined to project revenues within an order of magnitude, it can become a powerful business driver.

Due to intellectual property rights I can only share some of the model. I believe it is enough to get you thinking and moving in the right direction.

The heuristics I use in the model are as follows:

- Domain Authority (DA) – a logarithmic measure of a domain’s authority and ranking potential.

- Page Authority (PA) – a linear measure of an individual URL’s link and relevancy strength.

- Number of competing pages with target keyword in title (CP) – relative measure of SERP competitive landscape.

- Monthly Search Volume (MSV) – average exact local monthly search volume for individual keyword.

- Click-Through Rate (CTR) – average SERP click-through rate for URLs ranking in position ‘y’.

- Conversion Rate (CR) – average conversion rate for target goal.

- Monetization metric (M) – measure of approximate revenue per conversion; for eCommerce this may be average order size, for display advertising this would be eCPM, or earnings per 1,000 pageviews.

- Discount Rate (dr) – Rate at which the heuristic is discounted to adjust the relative significance within the model.

For DA and PA I used the average of the top n sites. The size of n will vary based on your confidence interval requirements.

The model is then built on a spreadsheet so each of the heuristics can be driven by the assumptions, i.e. cost per content, average cost per link, SERP CTR, avg revenue per page or per conversion, etc. And the assumptions can be adjusted as historical data is collected.

What this allows you to do is build a large-scale model for evaluating each keyword, projecting the cost, time, and potential return by using discount rates to adjust the relative importance of the varying competitive factors: DA, PA, and links.
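To give you something to start from, here is a heavily simplified sketch built on the heuristics listed above. It is not my full model. The revenue projection simply multiplies MSV, CTR, CR, and M. The competition score is one possible reading of the discount rate idea, in which each factor is scaled by (1 - dr) and the results are summed. The log scaling of CP and every name in the code are assumptions made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class KeywordOpportunity:
    keyword: str
    avg_da: float  # average DA of the top n ranking sites
    avg_pa: float  # average PA of the top n ranking URLs
    cp: int        # competing pages with the target keyword in the title
    msv: int       # monthly search volume
    ctr: float     # expected click-through rate at the target position
    cr: float      # conversion rate for the target goal
    m: float       # revenue per conversion


def projected_monthly_revenue(opp):
    """Project monthly revenue as MSV x CTR x CR x M."""
    return opp.msv * opp.ctr * opp.cr * opp.m


def discounted_competition(opp, dr):
    """Sum the competitive factors after applying each one's discount rate.

    dr maps "da", "pa" and "cp" to a rate between 0 and 1 (assumed scheme).
    CP is log-scaled so that page counts do not swamp the 0-100 DA and PA scores.
    """
    factors = {
        "da": opp.avg_da,
        "pa": opp.avg_pa,
        "cp": math.log10(opp.cp + 1),
    }
    return sum(value * (1 - dr[name]) for name, value in factors.items())
```

Dividing projected revenue by the competition score gives one crude way to rank thousands of keywords, which you can then refine as historical data comes in.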

I realize this may be hard to visualize as it is not common in the SEO industry to talk about automating keyword analysis for thousands of keywords.

As you design your own models that are representative of your business, make sure you take the time to define your success criteria. In other words, set S.M.A.R.T. goals before you start your campaigns. This will allow you to watch the needle as it moves, and understand which direction it’s moving in.

It is not going to be an overnight success. It may take months of adjusting your assumptions and discount rates before it starts projecting accurate costs and returns.

Lastly, this does not make sense for everyone. Spending the time and energy to build an analysis model like this really only makes sense if you are working on a project that requires you to look at thousands of new keywords each month.

Otherwise manual analysis and really getting a feel for your search vertical will probably give you a better sense of where to focus your optimization efforts than spending months tuning a formula.

Take the time to identify and accurately track your business’s true key performance indicators.

Wrapping Up

I apologize for speeding through what is probably the most captivating part of this post, the opportunity model, but this was intentional. This concept is an important and technical approach to enterprise SEO which is too complex to properly explore within this post.

It is important, however, to keep in mind that starting in 2017, Google’s shifts and tweaks to its ranking algorithms are happening more often. What you’ve learned from an SEO competitor analysis may no longer be true after a couple of months. Competitor analysis in SEO is great for getting valuable insights, but it won’t give you a remarkable boost unless you also provide unique value to your visitors. So keep an eye on your competitors, while constantly working on ways to improve UX on your site.

In the meantime I hope I have provided you with a straightforward path to begin identifying and analyzing your SEO competitors. You can also check the post in which I talk about Google search engine optimization with a website optimizer, and you can expect a dedicated post on how to design and build keyword opportunity models in the near future.

As always, any thoughts, reactions, or feedback are welcome in the comments. I do my best to respond to each one.

Thanks for reading.